I witnessed a dramatic boost in the performance of my website after switching to a Sydney-based server. In hindsight this is hardly surprising, but it illustrates how the performance of a website is dictated by more than pure bandwidth. My website is made with static HTML, which mitigates server-side lag under load. The main bottlenecks should therefore be the size of the content, and the number and latency of any requests for that content. In this post I describe how I addressed these performance bottlenecks.

Yahoo’s guidelines set out a few key principles for addressing latency and bandwidth bottlenecks:

- Minimising HTTP requests by combining separate files

- Adding an ‘expires’ header to each file to encourage caching

- Compressing HTML content using gzip

- Minifying, combining, and compressing CSS and javascript files

- Using a CDN to minimise latency

In addition to Yahoo’s guidelines, I also changed my Hyde template so that javascript and CSS would load as-needed. I’ll briefly describe how I implemented each change.

Limiting Requests

To minimise content requests, I cut two of my embedded fonts and added a local option for the third, so that a locally installed copy would be used in preference to the one served from my server.¹ I then consolidated all CSS and javascript into a few discrete files, each intended to perform a specific task:

- Handle retina images (using retina.js)

- Implement syntax highlighting of code (using highlight.js)

- Display images as a gallery (using fancybox)

- Render LaTeX math statements (using mathjax)

By dividing up the javascript/CSS in this way, each page transfers only the files it actually needs. To achieve this, I wrote a markdown header plugin for Hyde that scans each markdown file and records the types of content on the page in its metadata. I then adjusted my Hyde template so that each javascript/CSS pair loads conditionally, based on that metadata.
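The conditional loading can be sketched in Python as a lookup from metadata flags to asset files. This is a minimal illustration, not the Hyde template itself: the `FEATURE_ASSETS` table and `assets_for` function are hypothetical, only the highlight.js/highlight.css paths come from this post, and the other file names are placeholders.

```python
# Hypothetical mapping from page-metadata flags to the (js, css) files a
# feature needs. Only the highlight.* paths appear in the post; the rest
# are placeholder names for illustration.
FEATURE_ASSETS = {
    "retina": ("/media/js/retina.js", None),
    "code": ("/media/js/highlight.js", "/media/css/highlight.css"),
    "gallery": ("/media/js/fancybox.js", "/media/css/fancybox.css"),
    "math": ("/media/js/mathjax.js", None),
}


def assets_for(meta: dict) -> list[str]:
    """Return the <script>/<link> tags a page needs, given its metadata flags."""
    tags = []
    for feature, (js, css) in FEATURE_ASSETS.items():
        if not meta.get(feature):
            continue  # feature absent from this page: skip its files entirely
        if js:
            tags.append(f'<script src="{js}"></script>')
        if css:
            tags.append(f'<link rel="stylesheet" href="{css}">')
    return tags


print(assets_for({"code": True}))
```

A page without any flagged content gets no extra tags at all, which is the point of splitting the assets by task.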

For example, to display code with syntax highlighting, I indent the code by four spaces in my markdown file (the last two lines here):

```markdown
# Post title #

    #import <stdlib.h>
    int main () {…}
```

This is detected by my markdown header plugin, which adds the metadata ‘code: true’ to the markdown file. Based on this metadata, the javascript and CSS for syntax highlighting are conditionally included in the HTML via the following Hyde template:

```jinja
{% if resource.meta.code -%}
<script src="/media/js/highlight.js"></script>
<link rel="stylesheet" href="/media/css/highlight.css">
{%- endif %}
```

This loads highlight.js, which parses `<code>` elements and applies syntax highlighting, and highlight.css, which styles the result.
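The detection step in the plugin might look something like the following sketch. The heuristics (indented lines for code, `$$`, `\(` or `\[` for math, two or more images for a gallery) and the `detect_content` name are assumptions for illustration, not the plugin's actual rules; the indented-code check, for instance, would also fire on indented list continuations.

```python
import re

# Hypothetical heuristics; the real plugin's rules are not given in the post.
INDENTED_CODE = re.compile(r"^(?: {4}|\t)\S", re.MULTILINE)  # 4-space/tab indent
MATH = re.compile(r"\$\$|\\\(|\\\[")                         # $$, \( or \[
IMAGE = re.compile(r"!\[[^\]]*\]\([^)]+\)")                  # ![alt](src)


def detect_content(markdown: str) -> dict:
    """Return metadata flags describing what a markdown page contains."""
    return {
        "code": bool(INDENTED_CODE.search(markdown)),
        "math": bool(MATH.search(markdown)),
        # Treat two or more images as a gallery (an arbitrary threshold).
        "gallery": len(IMAGE.findall(markdown)) >= 2,
    }


page = "# Post title #\n\n    #import <stdlib.h>\n    int main () {}\n"
print(detect_content(page))
```

The resulting dictionary is the kind of metadata a template condition such as `resource.meta.code` could then test.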

After paring the number of files to a minimum, I made each file as small as possible by minifying and compressing it.

Minifying and Compressing Files

Minifying CSS and javascript strips whitespace, comments, and other unnecessary characters from the files. This only reduces file size by 5-20%, but it’s trivial to do and therefore worthwhile. I minified all CSS and javascript using cssmin and jsmin, respectively; you can find the Hyde plugins I wrote for them on GitHub. Once the files were minified, I compressed them.
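To show what minification does, here is a deliberately naive CSS minifier built from regular expressions. It is an illustration, not cssmin's algorithm: it would mangle comment-like text inside strings and can change the meaning of selectors such as `a :hover`, which is why a dedicated tool is the better choice in practice.

```python
import re


def minify_css(css: str) -> str:
    """Naive CSS minifier: strips comments and redundant whitespace.

    Illustrative only; it ignores edge cases (strings, selectors whose
    meaning depends on a space before ':') that real minifiers handle.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,>])\s*", r"\1", css)         # trim around punctuation
    css = css.replace(";}", "}")                          # drop final semicolons
    return css.strip()


src = "/* header */\nbody {\n    margin: 0;\n    color: #333;\n}\n"
print(minify_css(src))  # body{margin:0;color:#333}
```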

Compressing files is familiar even to novice computer users, though the underlying algorithms can be quite complex. Suffice it to say that most files contain redundancy (repeated tags, keywords, and whitespace), and gzip shrinks them by replacing repeated sequences with short references to earlier occurrences.
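A quick way to see gzip at work is Python's standard `gzip` module. The HTML snippet below is made up and far more repetitive than a real page, so expect real files to compress much less dramatically.

```python
import gzip

# A small, highly repetitive HTML snippet stands in for a real page.
html = ('<li><a href="/posts/">Post</a></li>\n' * 200).encode("utf-8")

compressed = gzip.compress(html)
print(len(html), "bytes ->", len(compressed), "bytes")

# Browsers undo this transparently when the response carries
# Content-Encoding: gzip; decompressing recovers the original exactly.
assert gzip.decompress(compressed) == html
```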

To compress my files, I hacked this python script to work as a Hyde publisher; I’ve uploaded the modified script to GitHub. The script originally compressed only javascript and CSS, so I added HTML, SVG, and TTF (font) files to its list.
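The compress-in-place step could be sketched as follows. This assumes files keep their original names (so URLs don't change) and are later served with `Content-Encoding: gzip`; the `gzip_site` function is hypothetical rather than taken from the modified script, and the S3 upload is omitted.

```python
import gzip
import os

# javascript and CSS per the original script, plus the HTML, SVG and TTF
# additions described above. Images are deliberately left alone.
COMPRESSIBLE = {".js", ".css", ".html", ".svg", ".ttf"}


def gzip_site(root: str) -> list[str]:
    """Gzip every compressible file under root in place; return their paths."""
    compressed = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if os.path.splitext(name)[1].lower() not in COMPRESSIBLE:
                continue  # e.g. JPEG/PNG: upload as-is
            path = os.path.join(dirpath, name)
            with open(path, "rb") as f:
                data = f.read()
            # Overwrite with gzip data under the same name so URLs are unchanged.
            with open(path, "wb") as f:
                f.write(gzip.compress(data))
            compressed.append(path)
    return compressed
```

Because the compressed files keep their names, the server must mark them with `Content-Encoding: gzip`, or browsers will receive unreadable bytes.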

The final script walks the site, compresses files of the permitted types using gzip, and then uploads the whole site to S3. As each file is uploaded, the script sets its content headers, which:

- Indicate the file type (e.g., Content-Type: application/javascript)

- Improve file caching (e.g., Cache-Control: max-age=63072000, i.e. two years, and Expires: Fri, 05 Dec 2014 05:30:01 GMT), and

- Indicate whether files were compressed (Content-Encoding: gzip).
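Building such headers needs only the standard library. In this sketch (the `upload_headers` name and its defaults are assumptions), `mimetypes` guesses the Content-Type and `email.utils.formatdate` produces an RFC 1123 date in GMT for Expires; the upload itself, e.g. with an S3 client library, is left out.

```python
import mimetypes
import time
from email.utils import formatdate


def upload_headers(path: str, gzipped: bool, max_age: int = 63072000) -> dict:
    """Build HTTP headers for one file (63072000 s = two years by default)."""
    content_type, _encoding = mimetypes.guess_type(path)
    headers = {
        "Content-Type": content_type or "application/octet-stream",
        "Cache-Control": f"max-age={max_age}",
        # RFC 1123 format, e.g. "Fri, 05 Dec 2014 05:30:01 GMT"
        "Expires": formatdate(time.time() + max_age, usegmt=True),
    }
    if gzipped:
        headers["Content-Encoding"] = "gzip"
    return headers


print(upload_headers("index.html", gzipped=True))
```

Depending on the Python version and the system's MIME tables, `.js` may be reported as `text/javascript` rather than `application/javascript`; browsers accept either.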

The gzip compression reduced the size of the uploaded files by a factor of three. I left binary image data (JPEG/PNG) uncompressed: these formats are already compressed, so gzip gains little, and the time required to decompress them seemed to outweigh any bandwidth savings.
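The reasoning about images is easy to check empirically. Here random bytes serve as a rough stand-in for JPEG/PNG payloads (an assumption: already-compressed data looks close to random to gzip), compared against repetitive CSS-like text of the same size.

```python
import gzip
import os


def gzip_ratio(data: bytes) -> float:
    """Compressed size as a fraction of the original size."""
    return len(gzip.compress(data)) / len(data)


image_like = os.urandom(100_000)                # high-entropy, like JPEG/PNG data
text_like = b"body { margin: 0; }\n" * 5_000    # 100,000 bytes of repetitive CSS

for label, data in [("image-like", image_like), ("text-like", text_like)]:
    print(f"{label}: compressed to {gzip_ratio(data):.0%} of original size")
```

The image-like data does not shrink at all (gzip's framing even adds a few bytes), so compressing it would cost decompression time for no gain.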

Now that I was transmitting as little data as possible, as infrequently as possible, my only remaining hurdle was latency. To address it, I used a content distribution network (CDN).

Content Distribution Network

A CDN replicates files across multiple servers around the world, minimising the time needed to transport a file between any given computer and the nearest server hosting it. Amazon offers a CDN called CloudFront as part of its suite of web services. As with Amazon S3, which I use to host my site, CloudFront is billed on usage. My website is likely to be viewed by about one person per month (yours truly), so I get the latency benefits of a snappy CDN for only a fraction of a cent per month.² There is a nice tutorial showing how to link CloudFront to an S3 site, but it’s actually better to follow this article if you want to keep a custom 404 page and map bare URLs to the index.html files they enclose.
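To put rough numbers on why distance matters, the sketch below computes a physical lower bound on round-trip time from great-circle (haversine) distance, assuming signals in optical fibre travel at about 200,000 km/s. The coordinates are approximate and chosen for illustration; real routes are longer and add switching delays, so actual latencies are higher.

```python
import math

EARTH_RADIUS_KM = 6371.0
FIBRE_SPEED_KM_PER_MS = 200.0  # light in fibre travels at roughly 2/3 of c


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in km between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(lat1, lon1, lat2, lon2):
    """Lower bound on round-trip time in ms; real networks are slower."""
    return 2 * great_circle_km(lat1, lon1, lat2, lon2) / FIBRE_SPEED_KM_PER_MS


# Approximate coordinates: a reader in Sydney vs. servers in Sydney and
# in northern Virginia (illustrative choices).
sydney = (-33.87, 151.21)
virginia = (38.95, -77.45)
print(f"Sydney -> Virginia: >= {min_rtt_ms(*sydney, *virginia):.0f} ms per round trip")
print(f"Sydney -> Sydney:   >= {min_rtt_ms(*sydney, *sydney):.0f} ms per round trip")
```

Every request pays at least one such round trip, so serving from a nearby edge location removes a delay that no amount of bandwidth can.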

After playing with CloudFront for a couple of days, I was annoyed by how long changes took to filter through the CDN, so I set the ‘Expires’ and ‘Cache-Control’ headers of HTML files to refresh every 12 hours, leaving images, javascript, and CSS (which change infrequently) at 100 days. Obviously a 12-hour delay is too slow for pushing rapid, minor edits, but I only post to my site about once a fortnight.
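The cache policy described above reduces to a simple rule keyed on file extension. This is a sketch with a hypothetical `max_age_for` function; it also assumes every non-HTML file (including fonts and SVGs, which aren't mentioned here) gets the 100-day lifetime.

```python
import os

HTML_MAX_AGE = 12 * 60 * 60          # 12 hours = 43,200 s
ASSET_MAX_AGE = 100 * 24 * 60 * 60   # 100 days = 8,640,000 s


def max_age_for(path: str) -> int:
    """Cache lifetime in seconds: short for HTML, long for everything else."""
    ext = os.path.splitext(path)[1].lower()
    return HTML_MAX_AGE if ext in {".html", ".htm"} else ASSET_MAX_AGE


print(max_age_for("posts/index.html"), max_age_for("media/css/highlight.css"))
```

Keeping HTML short-lived means new posts and edits propagate within half a day, while the rarely changing assets stay cached at the edge and in browsers.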

Wrap-Up

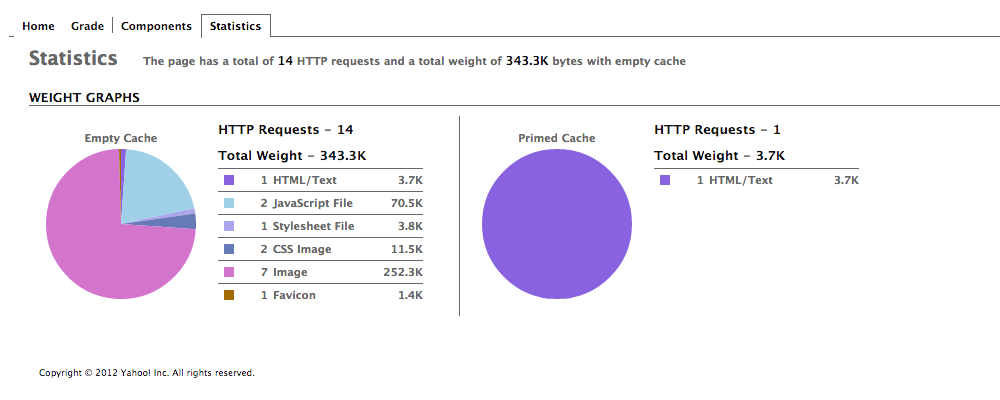

After making these changes, I scored all A’s on Yahoo’s YSlow website speed analyser (results shown in the image above). All told, I’m extremely pleased with my efforts. Best of all, this little foray into web design costs only ~1c per month, and it’s lots of fun!

¹ I chose to embed HelveticaNeue-Light because some browsers do not have a native version, and their fall-back fonts don’t suit my lazy, minimalist design.

² UPDATE: Actually, AWS is a little sneaky. Although the total cost equates to a fraction of a cent per month, they round the cost in each region up to one cent, so you pay around 10 cents per month at a minimum.